Classify Text into Labels

Tagging means labeling a document with classes such as:

- Sentiment

- Language

- Style (formal, informal etc.)

- Covered topics

- Political tendency

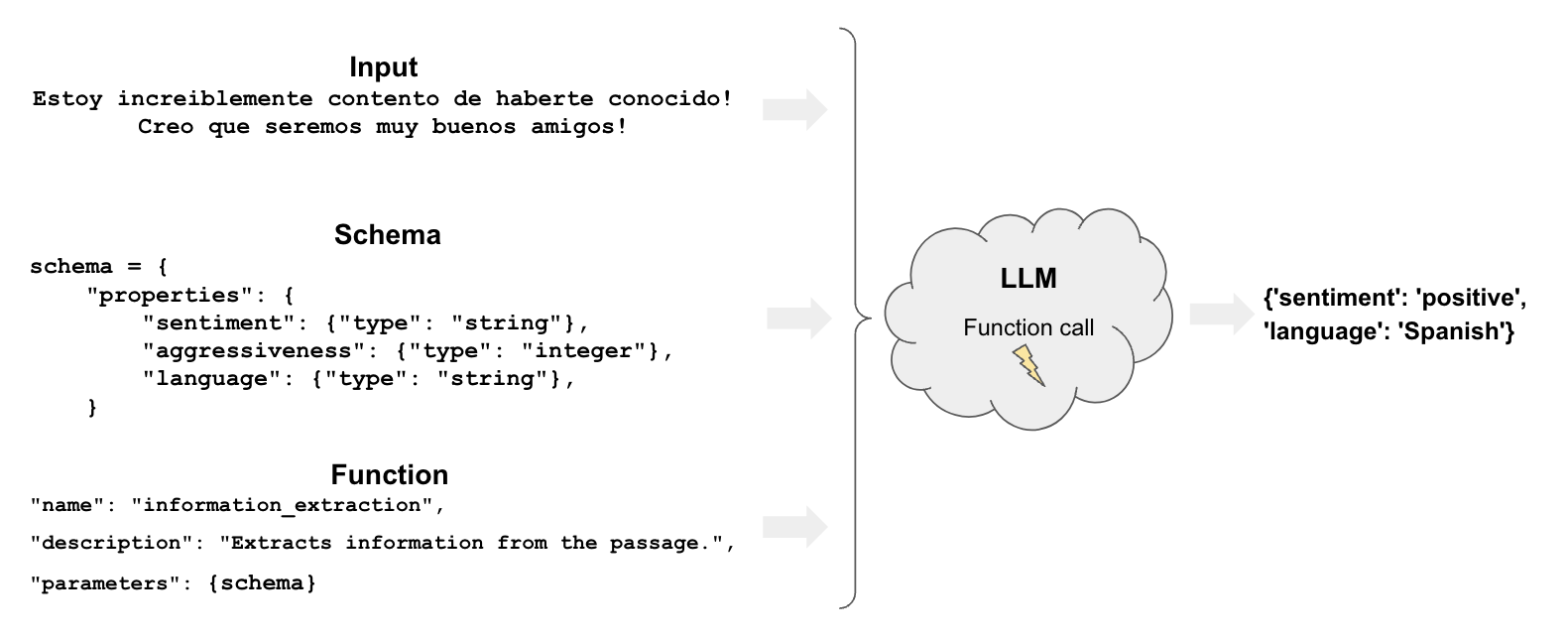

Overview

Tagging has a few components:

- function: Like extraction, tagging uses functions to specify how the model should tag a document

- schema: defines how we want to tag the document
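To make the schema component more concrete, here is a minimal sketch that prints the JSON schema Pydantic derives from a small model. It assumes Pydantic v2; the model name TagSchema and its fields are hypothetical, and the exact payload LangChain sends to a provider is not shown here and may differ.

```python
from pydantic import BaseModel, Field


class TagSchema(BaseModel):
    """Hypothetical tagging schema, used only for illustration."""

    sentiment: str = Field(description="The sentiment of the text")
    language: str = Field(description="The language the text is written in")


# Pydantic v2: derive the JSON schema that describes the expected fields.
schema = TagSchema.model_json_schema()

# Each property carries the type and description declared on the model.
for name, spec in schema["properties"].items():
    print(f"{name}: {spec['type']} ({spec['description']})")

# Fields without a default are listed as required.
print("required:", schema["required"])
```

The property names, types, and descriptions are what the model has to work with when it fills in the tags, which is why clear descriptions matter.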

Quickstart

Here is a simple example of using OpenAI tool calling for tagging in LangChain. We'll use the with_structured_output method supported by OpenAI models.

%pip install --upgrade --quiet langchain-core

We'll need to load a chat model:

- OpenAI

- Anthropic

- Azure

- Google

- AWS

- Cohere

- NVIDIA

- FireworksAI

- Groq

- MistralAI

- TogetherAI

pip install -qU langchain-openai

import getpass

import os

os.environ["OPENAI_API_KEY"] = getpass.getpass()

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model="gpt-4o-mini")

pip install -qU langchain-anthropic

import getpass

import os

os.environ["ANTHROPIC_API_KEY"] = getpass.getpass()

from langchain_anthropic import ChatAnthropic

llm = ChatAnthropic(model="claude-3-5-sonnet-20240620")

pip install -qU langchain-openai

import getpass

import os

os.environ["AZURE_OPENAI_API_KEY"] = getpass.getpass()

from langchain_openai import AzureChatOpenAI

llm = AzureChatOpenAI(

    azure_endpoint=os.environ["AZURE_OPENAI_ENDPOINT"],

    azure_deployment=os.environ["AZURE_OPENAI_DEPLOYMENT_NAME"],

    openai_api_version=os.environ["AZURE_OPENAI_API_VERSION"],

)

pip install -qU langchain-google-vertexai

# Ensure your VertexAI credentials are configured

from langchain_google_vertexai import ChatVertexAI

llm = ChatVertexAI(model="gemini-1.5-flash")

pip install -qU langchain-aws

# Ensure your AWS credentials are configured

from langchain_aws import ChatBedrock

llm = ChatBedrock(

    model="anthropic.claude-3-5-sonnet-20240620-v1:0",

    beta_use_converse_api=True,

)

pip install -qU langchain-cohere

import getpass

import os

os.environ["COHERE_API_KEY"] = getpass.getpass()

from langchain_cohere import ChatCohere

llm = ChatCohere(model="command-r-plus")

pip install -qU langchain-nvidia-ai-endpoints

import getpass

import os

os.environ["NVIDIA_API_KEY"] = getpass.getpass()

from langchain_nvidia_ai_endpoints import ChatNVIDIA

llm = ChatNVIDIA(model="meta/llama3-70b-instruct")

pip install -qU langchain-fireworks

import getpass

import os

os.environ["FIREWORKS_API_KEY"] = getpass.getpass()

from langchain_fireworks import ChatFireworks

llm = ChatFireworks(model="accounts/fireworks/models/llama-v3p1-70b-instruct")

pip install -qU langchain-groq

import getpass

import os

os.environ["GROQ_API_KEY"] = getpass.getpass()

from langchain_groq import ChatGroq

llm = ChatGroq(model="llama3-8b-8192")

pip install -qU langchain-mistralai

import getpass

import os

os.environ["MISTRAL_API_KEY"] = getpass.getpass()

from langchain_mistralai import ChatMistralAI

llm = ChatMistralAI(model="mistral-large-latest")

pip install -qU langchain-openai

import getpass

import os

os.environ["TOGETHER_API_KEY"] = getpass.getpass()

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(

    base_url="https://api.together.xyz/v1",

    api_key=os.environ["TOGETHER_API_KEY"],

    model="mistralai/Mixtral-8x7B-Instruct-v0.1",

)

Let's specify a Pydantic model with a few properties and their expected types as our schema.

from langchain_core.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

from pydantic import BaseModel, Field

tagging_prompt = ChatPromptTemplate.from_template(

"""

Extract the desired information from the following passage.

Only extract the properties mentioned in the 'Classification' function.

Passage:

{input}

"""

)

class Classification(BaseModel):

    sentiment: str = Field(description="The sentiment of the text")

    aggressiveness: int = Field(

        description="How aggressive the text is on a scale from 1 to 10"

    )

    language: str = Field(description="The language the text is written in")

# LLM

llm = ChatOpenAI(temperature=0, model="gpt-4o-mini").with_structured_output(

    Classification

)

inp = "Estoy increiblemente contento de haberte conocido! Creo que seremos muy buenos amigos!"

prompt = tagging_prompt.invoke({"input": inp})

response = llm.invoke(prompt)

response

Classification(sentiment='positive', aggressiveness=1, language='Spanish')

If we want dictionary output, we can just call .model_dump() (the older .dict() is deprecated in Pydantic v2)

inp = "Estoy muy enojado con vos! Te voy a dar tu merecido!"

prompt = tagging_prompt.invoke({"input": inp})

response = llm.invoke(prompt)

response.model_dump()

{'sentiment': 'enojado', 'aggressiveness': 8, 'language': 'es'}
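The conversion itself can be tried offline. The sketch below assumes Pydantic v2 and builds a result object by hand rather than calling a model; the class name TagResult and its values are illustrative. model_dump and model_dump_json are the Pydantic v2 methods, while .dict() is the v1 spelling that v2 still accepts with a deprecation warning.

```python
import json

from pydantic import BaseModel


class TagResult(BaseModel):
    """Hypothetical stand-in for a structured tagging result."""

    sentiment: str
    aggressiveness: int
    language: str


# Built by hand here; in the tutorial this object comes back from llm.invoke().
result = TagResult(sentiment="positive", aggressiveness=1, language="Spanish")

# Plain Python dict, convenient for further processing.
as_dict = result.model_dump()

# JSON string, convenient for logging or storage.
as_json = result.model_dump_json()

print(as_dict)
print(as_json)
```

Both forms carry the same fields, so the choice mostly depends on whether the next step expects Python objects or serialized text.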

As the examples show, the model correctly interprets what we want.

The results vary, however: sentiments may come back in different languages ('positive', 'enojado', etc.), and the language itself in different forms ('Spanish', 'es').

We will see how to control these results in the next section.

Finer control

Careful schema definition gives us more control over the model's output.

Specifically, we can define:

- Possible values for each property

- A description of each property, so that the model understands what it means

- Which properties are required in the output

Let's redeclare our Pydantic model to control for each of the previously mentioned aspects using enums:

class Classification(BaseModel):

    sentiment: str = Field(..., enum=["happy", "neutral", "sad"])

    aggressiveness: int = Field(

        ...,

        description="describes how aggressive the statement is, the higher the number the more aggressive",

        enum=[1, 2, 3, 4, 5],

    )

    language: str = Field(

        ..., enum=["spanish", "english", "french", "german", "italian"]

    )

tagging_prompt = ChatPromptTemplate.from_template(

"""

Extract the desired information from the following passage.

Only extract the properties mentioned in the 'Classification' function.

Passage:

{input}

"""

)

llm = ChatOpenAI(temperature=0, model="gpt-4o-mini").with_structured_output(

    Classification

)

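Now the answers should be restricted to the values we listed. Note that enum values passed through Field only end up in the JSON schema the model sees; Pydantic does not validate them, so an output can still contain a value outside the lists.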

inp = "Estoy increiblemente contento de haberte conocido! Creo que seremos muy buenos amigos!"

prompt = tagging_prompt.invoke({"input": inp})

llm.invoke(prompt)

Classification(sentiment='positive', aggressiveness=1, language='Spanish')

inp = "Estoy muy enojado con vos! Te voy a dar tu merecido!"

prompt = tagging_prompt.invoke({"input": inp})

llm.invoke(prompt)

Classification(sentiment='enojado', aggressiveness=8, language='es')

inp = "Weather is ok here, I can go outside without much more than a coat"

prompt = tagging_prompt.invoke({"input": inp})

llm.invoke(prompt)

Classification(sentiment='neutral', aggressiveness=1, language='English')
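If off-list values must never get through, one option is to declare the allowed values with typing.Literal so that Pydantic itself checks them. The following is a minimal sketch under that assumption (Pydantic v2); the class name StrictClassification is hypothetical and not part of the original example, and no model call is made.

```python
from typing import Literal

from pydantic import BaseModel, Field, ValidationError


class StrictClassification(BaseModel):
    """Hypothetical stricter variant of the Classification model."""

    sentiment: Literal["happy", "neutral", "sad"]
    aggressiveness: Literal[1, 2, 3, 4, 5] = Field(
        description="describes how aggressive the statement is, "
        "the higher the number the more aggressive",
    )
    language: Literal["spanish", "english", "french", "german", "italian"]


# Values that respect every Literal are accepted.
ok = StrictClassification(sentiment="happy", aggressiveness=1, language="spanish")
print(ok)

# Off-list values are rejected by Pydantic during validation.
rejected_fields = []
try:
    StrictClassification(sentiment="enojado", aggressiveness=8, language="es")
except ValidationError as exc:
    rejected_fields = sorted(str(err["loc"][0]) for err in exc.errors())
    print("rejected:", rejected_fields)
```

Passing a model like this to with_structured_output in place of Classification should surface off-list values as validation errors instead of returning them silently, at the cost of having to handle those errors.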

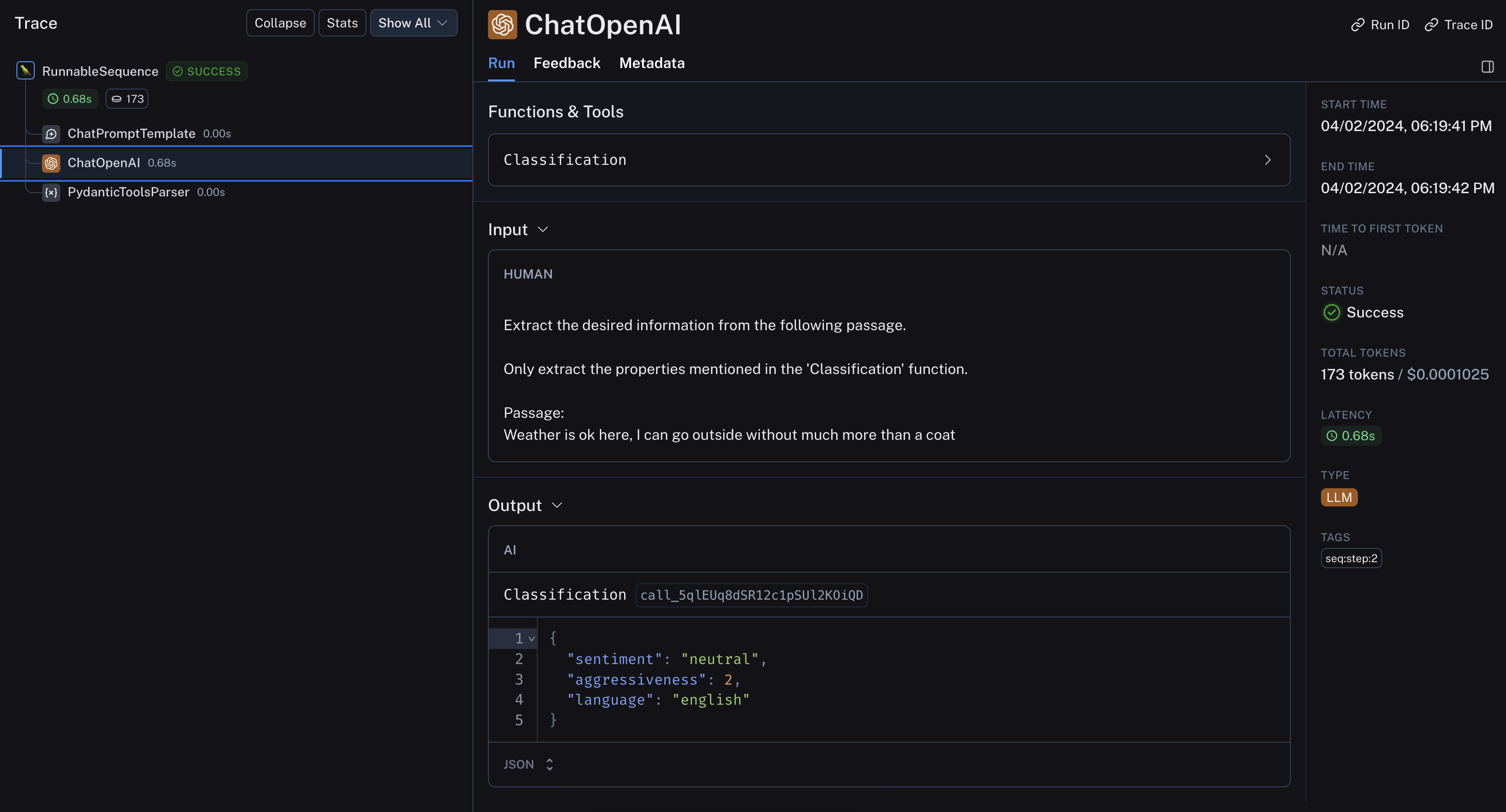

The LangSmith trace lets us peek under the hood:

Going deeper

- You can use the metadata tagger document transformer to extract metadata from a LangChain Document.

- This covers the same basic functionality as the tagging chain, only applied to a LangChain Document.